A Brief History of U.S. Research Funding

Before World War II, the federal government didn’t fund research. But after scientists with the Manhattan Project helped win the war, the U.S. was convinced that university research was a great national investment. Until now. A look back at how we got here.

It was August 2023 and Casey Harrell and his family were weeping tears of joy. Harrell, who has ALS, was able to speak the first words his five-year-old daughter had ever heard him say, thanks to a brain-computer interface that translates brain signals into speech with up to 97 percent accuracy. The breakthrough that allowed Harrell to speak was the work of a consortium of scientists called BrainGate, led by Brown researchers and School of Engineering alumni. The funding came from the federal government, specifically grants from the National Institutes of Health (NIH).

Twelve years earlier, in 2011, Brown had hired Lawrence Larson as first dean of the newly elevated School of Engineering. Larson had been a department chair at the University of California-San Diego. Then, and now, UC San Diego was one of the nation’s top schools for securing federal research grants, and Larson said it was no secret that Brown hoped he could bring some of that same mojo to College Hill.

“I was basically brought to Brown in order to try to up our research profile,” Larson, now a Brown engineering professor, said in a recent interview, recalling his dozen years as dean. Thanks to a generous era of grants from federal agencies such as the National Science Foundation (NSF), Larson largely accomplished his mission. As it roughly doubled outside research funding, the School of Engineering set about hiring top academic talent and building the $88 million Engineering Research Center, with spacious new labs in state-of-the-art fields such as nanotechnology.

It was this growing and fruitful partnership with Washington that sparked the breakthrough that allowed Casey Harrell to speak to his daughter for the first time ever. “Not being able to communicate is so frustrating and demoralizing. It is like you are trapped,” Harrell said at the time. “Something like this technology will help people back into life and society.”

Harrell’s computer-aided speech added to the long list of innovations credited to the 80-year partnership between the federal government and America’s top universities that—at its best—has cured or diminished diseases, made America the global leader of a so-called knowledge economy, and turned the United States into a magnet for top scientists from all over the world. But this poignant moment of discovery may also have foreshadowed the end of an era.

Building an American empire of knowledge

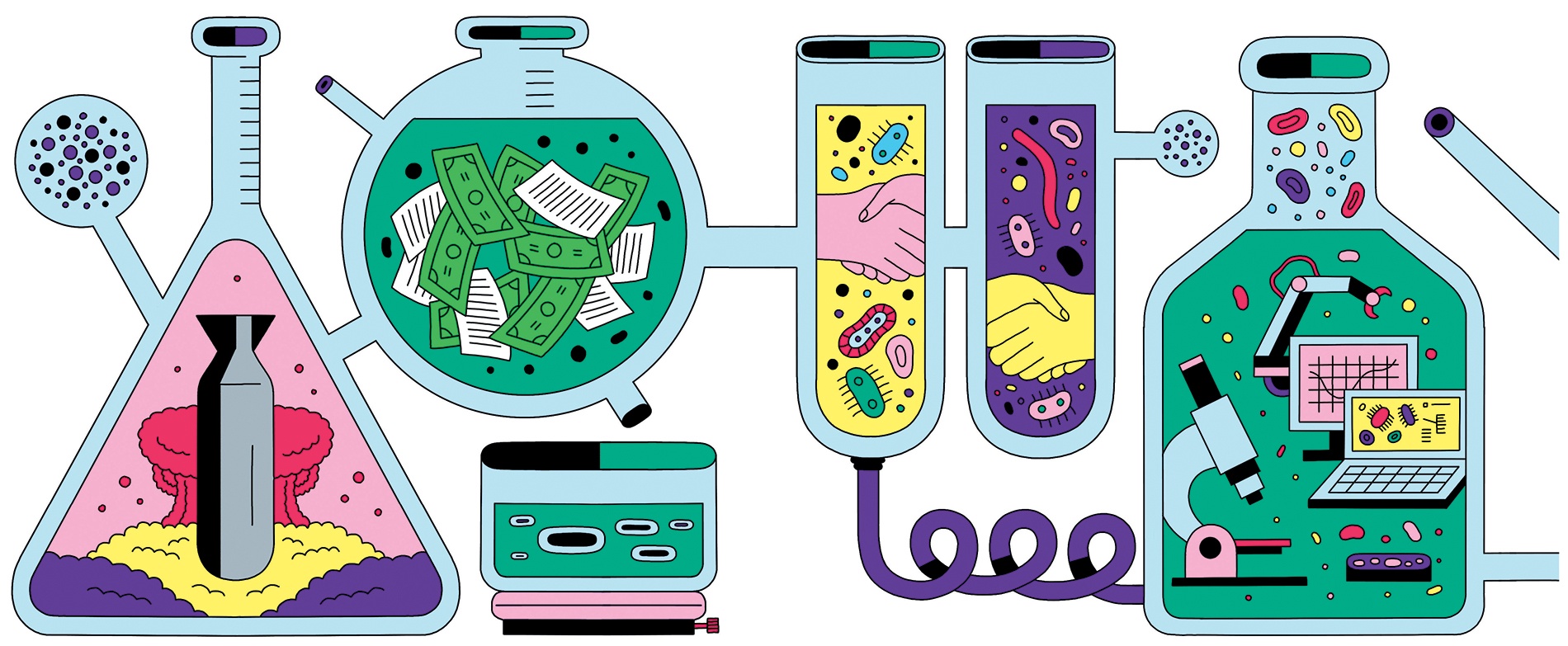

Before the January arrival of a new administration in Washington precipitated seismic shifts in the landscape for federal research funding, the history of the modern research ecosystem in American academia was almost literally the story of acceleration from zero to 60. Zero was essentially the level of federal research support before the start of World War II, while in 2023, $60 billion flowed from the government in D.C. to America’s universities to invest in research of all kinds.

It’s a partnership that has been so mutually beneficial that it’s hard to believe it might never have happened. The role that university-based scientists played in the Allied World War II victory—most famously with the Manhattan Project that produced the atom bomb, but also with critical innovations from advanced radar to penicillin—sparked a national conversation as the war ended in 1945 about how to sustain that effort. And two critical, intertwined decisions—to support basic science and to do so through grants to top schools like Johns Hopkins or MIT, rather than a centralized government agency—were the choices that launched an American empire of knowledge.

“They said, essentially, that we want to be efficient but we don’t want to be too socialist,” says Luther Spoehr, Brown senior lecturer emeritus in education, who teaches the modern history of universities. Led by the father of the government-academia partnership—the MIT professor Vannevar Bush, who supervised the Manhattan Project and pressed for the creation of the NSF—a forward-looking Washington urged top scientists to compete for federal grants with their best ideas.

Brown was not at the head of this parade. In those postwar years, it boosted its national reputation more through an emphasis on undergraduate teaching and curriculum than through research superstars. But while it is still not ranked near the top in federal research dollars, it has steadily—perhaps inevitably—gotten on board. Now, the growth of research at Brown’s School of Engineering has been overtaken by federal investment in the life sciences, which these days account for nearly 70 percent of Brown’s research portfolio through grants centered at the Warren Alpert Medical School and School of Public Health. In all, federal grants to Brown reached roughly $250 million a year through the 2020s.

The virtuous cycle of upward investment that drove the Cold War “space race,” a government war on cancer, the creation of new computers, and an internet that powered Silicon Valley was so popular and so noncontroversial that it mostly didn’t occur to its key players that someday a federal administration might express open hostility toward science and cultural grievances against higher education. Or that a White House might use its huge financial leverage to demand radical change.

And yet polls report that growing numbers of voters fear that colleges are steeped in what some have claimed is liberal indoctrination. Since the change in federal administrations in January, the government has stunned academia not only with sudden and often unexplained funding freezes at core agencies like the NIH and NSF, but also with targeted attacks on campuses from Harvard to the University of Virginia over issues like diversity programs, transgender athletes, and the conduct of protesters during 2024’s pro-Palestinian encampments.

Along with a handful of leading research universities, Brown has found itself in the middle of this maelstrom. In April, University officials braced against a threatened halt in $510 million in federal funding as the government investigated Brown’s approach to combating antisemitism and to diversity, equity, and inclusion (DEI) programs. In addition, ongoing pauses in the NIH grants that make up 70 percent of Brown’s federal research dollars had a devastating impact. In late June, in extending a campuswide hiring freeze, University President Christina Paxson said Brown was losing $3.5 million a week in unpaid bills from existing NIH grants. Then, on July 30, she announced an agreement to restore the flow of funds (see “Brown cuts a deal,” at right).

Columbia and the University of Pennsylvania made deals before Brown did, while Harvard, Northwestern, Cornell, and Princeton were, at press time, among the institutions still facing funding threats. With possible courtroom fights ahead involving some of these institutions, the federal pullback is likely to remain the biggest story at college campuses across the nation as the new academic year begins. But the ongoing crisis also raises questions about the past. How did we get to this place where America’s top universities became so dependent on research dollars from Washington—a Jenga block increasingly propping up the research enterprise in higher education, now threatened by a federal administration toying with pulling it out?

The story is very much an affirmation of a cliché, that necessity is the mother of invention. In 1943, the necessity was defeating the Nazis and winning World War II.

Exploring a new American frontier

Vannevar Bush was one of the most significant Americans of the 20th century that most Americans have never heard of. A top engineer at MIT, Bush was a man who had ideas ahead of his contemporaries—as a 1930s computing pioneer who developed concepts that later evolved into the internet—and a great sense of timing. Moving to Washington in 1939 to head the Carnegie Institution for Science, Bush became a leading adviser to President Franklin Roosevelt when World War II erupted. As chair of the National Defense Research Committee, he became Washington’s point man for the Manhattan Project, helping recruit America’s top university physicists—most notably the University of California’s J. Robert Oppenheimer—to build the world’s first atomic bomb in the New Mexico desert.

While the Manhattan Project and Oppenheimer became legends, they were also the leading edge of a bevy of scientific breakthroughs, driven by the urgency of war, that gave U.S. troops and their allies an advantage in both Europe and the Pacific. These included the mass production of penicillin, which saved thousands of wounded soldiers, the development of advanced radar, and the proximity fuze, which triggers a bomb to explode as it nears its target.

In 1944, as the tide turned in the Allies’ favor, Roosevelt was increasingly frail yet seeking an unprecedented fourth term. He made two consequential decisions that changed the future of higher education in America. He supported and signed the law known as the G.I. Bill, which offered a free college benefit for veterans that kicked off decades of rising college enrollment. But he also asked Bush to craft a report on how the government could continue to foster scientific innovation after the war—partly out of hopes that new technology could continue to sustain a suddenly booming U.S. economy.

“The fear as the war was coming to a close was that maybe the Depression would resume,” says G. Pascal Zachary, author of the biography Endless Frontier: Vannevar Bush, Engineer of the American Century. What’s more, Bush had noted that foreign-born scientists had played a key role in the Manhattan Project, fueling his desire to train more American experts. Zachary said Bush saw “the need for the U.S. to produce, to create, and spawn more scientists and researchers in their own country.”

Bush’s landmark report—Science: The Endless Frontier, published in July 1945, just weeks before the first A-bomb was dropped on Hiroshima—built on his longtime ideas that, as Zachary wrote, “the quest for knowledge could replace the vanishing American frontier as the new source of American freedom and creativity.” Bush argued that—in the postwar world—this would mean putting faith in the top academic experts and backing their ideas with government grants, sponsoring basic science instead of narrowly targeted projects.

Bush’s elevated prestige after the Manhattan Project all but assured that he would have little trouble convincing new President Harry Truman to back his plan, although Truman did insist that Bush’s proposed National Science Foundation not have an independent board but come under administration control—a decision that would have consequences 80 years later. Even so, it took five long years for Congress to finally fund the NSF; other agencies like the Office of Naval Research and the Atomic Energy Commission, which flowed out of the Manhattan Project, filled the gap as the Cold War with the Soviet Union heated up on World War II’s smoldering embers.

In many ways, that conflict with the USSR shaped the first decades of Washington’s growing relationship with universities, not just in the substance of the research—from building even more powerful atomic weapons to the accelerating race to send satellites and humans into space—but also in the approach. The Cold War was defined in large part by the increasing use of propaganda, and the contrast between the new American model of academic freedom and competitive grants and the Soviet system of rigidly controlled, government-directed research was a tale that D.C.’s new spin doctors wanted to tell.

“Science in the United States was supposed to be basic science,” says Audra J. Wolfe, the author of Freedom’s Laboratory: The Cold War Struggle for the Soul of Science. “It wasn’t supposed to be applied. It wasn’t supposed to be materialist. It wasn’t driven by material needs of the people as you have under Communism. It was driven by the world of ideas, and that science in the United States could be intensely international and cooperative.” That narrative was meant to appeal to the world’s developing nations, many on the precipice between communism and capitalism.

Becoming a global beacon for scientific progress

Despite its historical significance, federal funding of campus research in the early 1950s was just a fraction of what it would become by its 2024 peak, and it was heavily concentrated in a handful of schools such as Johns Hopkins, MIT, Stanford, and Berkeley. But the race to expand research and compete for government grants would touch almost all of academia, including Brown. During the 1950s, the University’s engineering department began a long era of expansion, while the University attracted top émigré scientists such as William Prager, who had left his native Germany in 1933 and later launched Brown’s applied math department. The flood of foreign scientists served as confirmation that America was becoming the global beacon for scientific progress.