The Skinny on Big Data

It may allow corporations to know more about you than you’d like—but it can also be a force for good.

The definition of “big data” is a moving target. Its scope keeps growing as more of our world is digitally recorded and as computers and algorithms get better and faster at connecting reams of seemingly random data points. Your Facebook likes, your fifth-grade standardized test results, how many Band-Aids were sold last year at your local pharmacy, how many times a piece of factory equipment was serviced over five years: these data reveal patterns, trends, even truths. And yes, these tools let corporations increase profits by predicting consumer behavior. But they may also help fight poverty, detect cancer, and explore new areas of particle physics.

The Shape of Cancer

The exponential growth of social media is often touted as a driving force in big data, but Twitter has nothing on your body. The data generated by your DNA would dwarf even the most manic Tweetstorm.

Continuing increases in computing power can’t keep up with demand. “You can’t use brute force anymore. I don’t have the computing power to do that,” explains Lorin Crawford, assistant professor of biostatistics. “Your body is not linear. Part of our challenge is to figure out how to search in an intelligent and less computationally costly way.”

Crawford’s lab works on refining our ability to interpret the genetic code, understanding resistance to cancer drugs, and using big data to advance medical science. His current obsession is glioblastoma multiforme (GBM), an aggressive and usually deadly cancer that begins in the brain. GBM is typically diagnosed via magnetic resonance imaging (MRI), and researchers have amassed a huge library of MRI scans. Crawford’s lab is converting the complex three-dimensional tumor shapes in these scans into statistics, which can then be mapped to genetics and other variables. The goal is to understand how and when different mutations lead to different tumor shapes. If successful, the analysis could act as a kind of digital biopsy, flagging likely mutations and suggesting treatment options.

Big Opportunity

Can big data rescue the American Dream? That’s the ambitious, lift-all-boats hope of Brown Associate Professor of Economics John Friedman, who co-directs the Equality of Opportunity Project with two colleagues from Harvard. With major foundation support, they scour income and social welfare data to identify and design policies that help families climb out of poverty.

Of Americans born seventy years ago, a remarkable 90 percent went on to earn more than their parents. Only about half of Americans born in 1980 have managed to do the same. A perceived lack of opportunity, says Friedman, helps drive our current political malaise: “This is part of a deep frustration about how difficult it is for themselves, and especially for their kids, to feel like each generation is moving forward.”

Surveys have long been a primary tool of economists, but they are expensive and limited by respondents’ memories. Friedman prefers big data, particularly big government data, which lets researchers track outcomes over time. “Being able to work with what is, effectively, the full population obviously gives you quite a bit more power to understand what’s going on,” he says.

Big data also catches local differences that can provide a blueprint for intervention. “There is an enormous amount of variation,” says Friedman. For example, a child who grows up in Atlanta in the bottom fifth of the income distribution has a 4.5 percent chance of reaching the top fifth in adulthood. In Seattle, that chance more than doubles, to 10.9 percent. “These differences are causal,” he says. “If you move from Atlanta to Seattle, your children will do better.” The team’s newsmaking “Opportunity Atlas” map charts how children fare as adults, neighborhood by neighborhood.
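The “more than double” comparison is simple arithmetic on the two figures Friedman cites. A minimal Python check, using only the Atlanta and Seattle percentages quoted above (the function and constant names are our own, for illustration):

```python
def mobility_ratio(chance_a: float, chance_b: float) -> float:
    """Return how many times larger chance_b is than chance_a."""
    return chance_b / chance_a

# Percent chance that a child from the bottom fifth reaches the top fifth,
# as quoted in the article.
ATLANTA = 4.5
SEATTLE = 10.9

ratio = mobility_ratio(ATLANTA, SEATTLE)
print(f"Seattle / Atlanta = {ratio:.2f}")  # prints "Seattle / Atlanta = 2.42"
```

A ratio of about 2.4 is what “more than double” summarizes.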

You can’t exactly tell Atlanta to be more like Seattle. But big data can help isolate the variables that matter. “Providing opportunity for children requires local action,” Friedman says. “It’s going to be special and specific to each setting.”

The Math of God

In 2012, when Professor of Physics Meenakshi Narain and more than 10,000 collaborators confirmed the existence of the Higgs boson, nicknamed the “God particle,” showing their math would have been no small task. The European Organization for Nuclear Research, better known by its French acronym CERN, anchors a worldwide computing grid, roughly 20 percent of whose capacity sits in CERN’s own data center.

That’s what it takes to detect a particle that exists for less than a nanosecond. CERN’s Large Hadron Collider (LHC) is housed in a 16.6-mile circular tunnel beneath the French-Swiss border near Geneva, where beams of protons collide. The resulting impacts, up to a billion per second, emit showers of particles captured by an array of detectors that together weigh more than the Eiffel Tower.

Data surges out of the LHC faster than it can be recorded, creating the biggest of big-data challenges. Complex algorithms sort it on the fly so that only the most promising 1 percent is captured. Still, it’s like looking for needles in a field full of haystacks: “We’re looking for 100 events of the Higgs boson in a trillion events of the known,” explains Narain.
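To get a feel for those numbers, here is a back-of-the-envelope sketch in Python using the article’s round figures: up to a billion collisions per second, roughly 1 percent kept, and about 100 Higgs events per trillion. It treats those figures as exact, which they are not, so read the results as orders of magnitude only. The names are our own illustrations, not CERN software.

```python
# Round figures quoted in the article, treated as exact for illustration.
COLLISIONS_PER_SECOND = 1_000_000_000  # "up to a billion per second"
FRACTION_KEPT = 0.01                   # "only the most promising 1 percent"
HIGGS_EVENTS = 100                     # "100 events of the Higgs boson..."
BACKGROUND_EVENTS = 1_000_000_000_000  # "...in a trillion events of the known"

def events_kept_per_second(rate: int, fraction: float) -> float:
    """Events that survive the on-the-fly filter each second."""
    return rate * fraction

def signal_fraction(signal: int, background: int) -> float:
    """Share of events that are the signal being searched for."""
    return signal / background

kept = events_kept_per_second(COLLISIONS_PER_SECOND, FRACTION_KEPT)
share = signal_fraction(HIGGS_EVENTS, BACKGROUND_EVENTS)
print(f"kept per second: {kept:,.0f}")  # about ten million
print(f"Higgs share: {share:.0e}")      # one in ten billion
```

Even after the filter discards 99 percent of collisions, about ten million events a second remain, and only about one in ten billion of the events searched is a Higgs candidate.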

In July 2018, the team announced a follow-up discovery: the Higgs boson decaying into fundamental particles known as bottom quarks. To date, the LHC has produced one exabyte of data; by 2026, it is expected to create 30 exabytes. “Even though it’s huge, it’s still not huge enough,” laughs Narain.

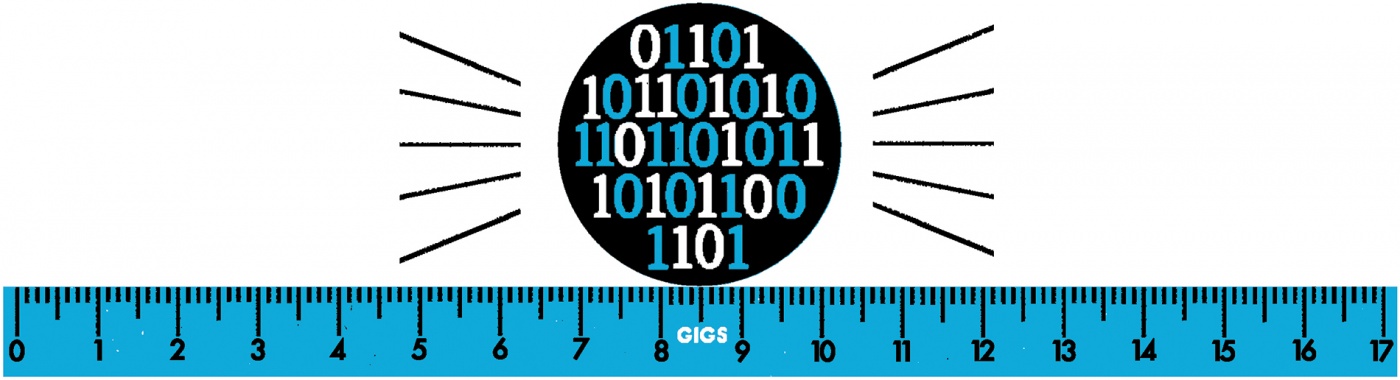

How Big is “Big”?

You may know that 2 megabytes is enough for a nice printable photo. But what is a zettabyte? Julian Bunn, a computational scientist at Caltech, helps people wrap their heads around the bigger bytes. A sampling of his comparisons:

1 byte: a single character

1 gigabyte (giga=billion): a pickup truck filled with paper

2 terabytes (tera=trillion): an academic research library

2 petabytes (peta=quadrillion): all U.S. academic research libraries

5 exabytes (exa=quintillion): all words ever spoken by human beings
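Each prefix in Bunn’s list is a factor of 1,000 larger than the one before. Here is a small Python helper (our own illustration, not Bunn’s) that expresses a byte count in the largest fitting unit, going up through zettabytes and yottabytes. It assumes decimal (SI) prefixes; operating systems and storage tools sometimes use binary multiples of 1,024 instead.

```python
UNITS = ["bytes", "kilobytes", "megabytes", "gigabytes", "terabytes",
         "petabytes", "exabytes", "zettabytes", "yottabytes"]

def human_readable(num_bytes: float) -> str:
    """Format a byte count using decimal prefixes, each 1,000 times the last."""
    value = float(num_bytes)
    for unit in UNITS[:-1]:
        if value < 1000:
            return f"{value:g} {unit}"
        value /= 1000  # step up to the next prefix
    # Anything past zettabytes is reported in yottabytes.
    return f"{value:g} {UNITS[-1]}"

print(human_readable(2e6))     # 2 megabytes
print(human_readable(5e18))    # 5 exabytes
print(human_readable(163e21))  # 163 zettabytes
```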

As for zettabytes (sextillion) and yottabytes (septillion), Bunn didn’t even try. But that’s where we are: in 2017 the market-research firm IDC estimated that the world was producing 16.3 zettabytes of data per year, a figure it expected to grow to 163 zettabytes per year by 2025.
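Going from 16.3 to 163 zettabytes a year is a tenfold increase. The implied compound annual growth rate can be sketched in Python. The eight-year span (2017 to 2025) is our assumption about which years IDC’s figures cover, so treat the result as approximate.

```python
def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate that turns start into end over `years` years."""
    return (end / start) ** (1 / years) - 1

# Assumption: 16.3 ZB/year applies to 2017 and 163 ZB/year to 2025.
rate = cagr(16.3, 163, years=8)
print(f"implied growth: {rate:.1%} per year")  # about 33 percent per year
```

If the starting figure instead refers to an earlier year, the span is longer and the implied annual rate is somewhat lower.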