Artificial Intelligence Is as Unfair as We Are.

A new course asks how we can harness AI without teaching it all of our biases and automating oppression.

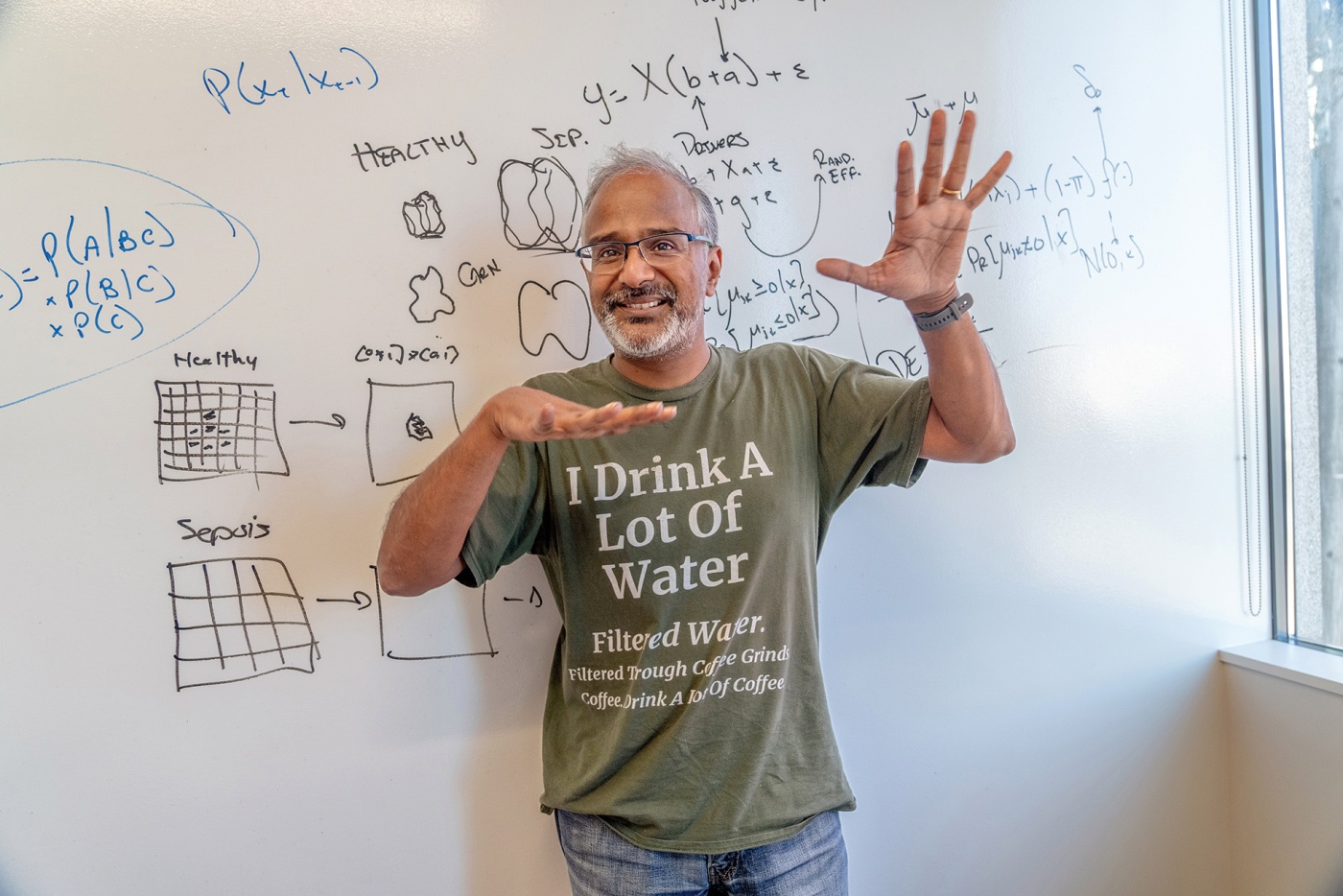

Can an algorithm be racist or sexist? Not exactly, but AI can produce answers that are both wrong and unfair, depending on what data sets the program is being trained on. For example, if an algorithm is 100 percent accurate at identifying skin cancer in white people, and is tested using 90 pictures of white skin and 10 pictures of Black skin, it could receive a cheering accuracy score of 90 percent while failing to correctly identify cancer in a single Black person. If the coder instead measured accuracy for each racial group separately, the algorithm’s weakness would immediately become clear.
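The arithmetic behind that example can be sketched in a few lines of Python. This is only an illustration under stated assumptions: every test image is assumed to show cancer, the model is assumed to flag all 90 images of white skin and none of the 10 images of Black skin, and the helper names `accuracy` and `accuracy_by_group` are hypothetical rather than taken from the course.

```python
from collections import defaultdict


def accuracy(labels, predictions):
    """Fraction of predictions that match the true labels."""
    correct = sum(1 for y, p in zip(labels, predictions) if y == p)
    return correct / len(labels)


def accuracy_by_group(groups, labels, predictions):
    """Compute accuracy separately for each group."""
    totals = defaultdict(int)
    hits = defaultdict(int)
    for g, y, p in zip(groups, labels, predictions):
        totals[g] += 1
        hits[g] += int(y == p)
    return {g: hits[g] / totals[g] for g in totals}


# Assumed test set: 90 images of white skin, 10 of Black skin, all with cancer (label 1).
groups = ["white"] * 90 + ["Black"] * 10
labels = [1] * 100
# Assumed model behavior: detects every case on white skin, misses every case on Black skin.
predictions = [1] * 90 + [0] * 10

overall = accuracy(labels, predictions)
per_group = accuracy_by_group(groups, labels, predictions)

print(overall)    # 0.9
print(per_group)  # {'white': 1.0, 'Black': 0.0}
```

The single overall score hides the failure; the per-group breakdown exposes it at a glance.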

Similarly, an algorithm trained on raw data from the 1900s might predict that women are less likely to graduate from college than men. But if a college used that algorithm to admit only the students with the highest predicted likelihood of graduation, it would be illegally discriminating on the basis of gender. An algorithm is a tool that predicts future outcomes based on correlations in the data it is given. Unfortunately, many correlations in historical data sets reflect systems of oppression.
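A toy sketch can show how a historical correlation turns into a discriminatory rule. Everything here is an assumption made for illustration: the records are invented, not real historical data, and the "model" is nothing more than the graduation frequency observed for each gender. The function names `fit_rates` and `admit_top` are hypothetical.

```python
# Invented toy records, NOT real historical data: (gender, graduated)
historical = (
    [("man", True)] * 8 + [("man", False)] * 2
    + [("woman", True)] * 3 + [("woman", False)] * 7
)


def fit_rates(records):
    """Learn the graduation frequency for each gender from past records."""
    totals, grads = {}, {}
    for gender, graduated in records:
        totals[gender] = totals.get(gender, 0) + 1
        grads[gender] = grads.get(gender, 0) + int(graduated)
    return {g: grads[g] / totals[g] for g in totals}


def admit_top(applicants, rates, k):
    """Admit the k applicants with the highest predicted graduation rate."""
    ranked = sorted(applicants, key=lambda a: rates[a["gender"]], reverse=True)
    return ranked[:k]


rates = fit_rates(historical)

# Hypothetical applicant pool; gender is the only feature the model sees.
applicants = [
    {"name": "A", "gender": "woman"},
    {"name": "B", "gender": "man"},
    {"name": "C", "gender": "woman"},
    {"name": "D", "gender": "man"},
]
admitted = admit_top(applicants, rates, k=2)

print(rates)
print([a["gender"] for a in admitted])
```

Because the only signal in the invented records is gender, ranking by predicted graduation rate admits men ahead of every woman, regardless of any individual applicant's merits.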

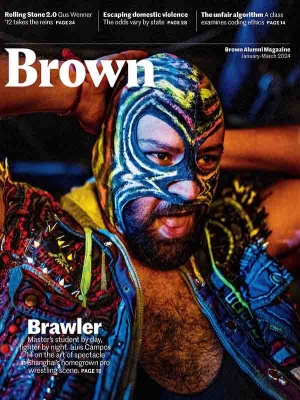

“Once you get people on board with the idea that we should do something about making sure our systems are fair and unbiased and accountable, the next obvious question is how do you do that?” says Professor Suresh Venkatasubramanian, who premiered CSCI 1951Z, Fairness in Automated Decision-Making, last fall. “This class is really trying to answer that.”

Machines & Morality

In 2012, Venkatasubramanian took a sabbatical from teaching computer science at the University of Utah to think about the future of his field. “What will technology look like five years from now?” he asked himself.

His best guess conjured up a world built upon a ubiquity of automated decision-making systems. The thought was exciting but also gave him pause. Already, stories were emerging about the dangerous consequences of algorithmic failures. He decided to start exploring the causes of algorithmic bias and researching potential solutions.

His passion for algorithmic fairness may have been quietly ignited, but a little less than ten years after his first forays into the field, Venkatasubramanian was a national expert, serving under President Biden as the assistant director for science and justice in the White House Office of Science and Technology Policy. During his tenure in government, he coauthored the seminal Blueprint for an AI Bill of Rights. Since 2021, he has also taught Brown computer science students and led Brown’s Center for Technological Responsibility. Last semester, he premiered the Fairness in Automated Decision-Making course to teach the next generation of computer scientists how to put ethics first.

The class assignments involved coding, but his students were well-versed in machine learning and didn’t find the technical aspects difficult. Instead, the course posed a unique challenge: for every piece of code a student built, they were asked to provide a comprehensive, critical analysis of their methods and results.

That’s because Professor Venkatasubramanian believes paying careful attention to methodological details is key to identifying and mitigating algorithmic bias. “The way we choose to design our algorithms, our evaluation strategies, and many other elements have ramifications,” he says. “You may think they’re arbitrary, but they’re not.”

Many times, though, it’s not at all clear how to incorporate ethical principles into technical decision making. Teaching assistant Tiger Lamlertprasertkul ’24 says that he had to approach TA hours differently for this class than any other CS class he’s helped teach. “The way you need to instruct students when they come to hours is like, ‘Okay, this is how we think about it,’” he says. “We don’t really have a solution. We kind of discuss it together.”

For Lamlertprasertkul, it was a welcome change of pace. In other courses, he had felt like students came to office hours just to wheedle answers out of TAs. “In this class, you can see that they’re really curious about the materials, and that’s really nice,” he adds.

Undergraduate Council of Students president Ricky Zhong ’23, one of the roughly 20 students in CSCI 1951Z last year, finds the ambiguity refreshing. “It’s much more relevant and exciting for me to think about the ways that I am going to be building technologies than to just think about how I can make a program run faster,” he says.

Blazing a Trail

From the course’s inception, Professor Venkatasubramanian made it clear that the field of CS didn’t have solutions to many of the questions he was posing—and that he didn’t expect his students to flawlessly bridge the gap between principles and practice.

“I want them to tell their bosses, ‘There are things we can do. They’re not great, but there are things we can start doing,’” he says.

Yet both in the classroom and in industry, “their job should not depend on them coming up with a fairness module that works with a system; their job should be to point out that these discussions need to happen,” he emphasizes.

If the academy doesn’t know what fair algorithms look like, though, where should coders turn for advice on ethical programming? Professor Venkatasubramanian hopes that companies will eventually have internal ethics boards and make anti-bias efforts organizational priorities. Ideally, students entering industry after taking courses about algorithmic fairness could promote ethical practices in the workplace. However, neither the professor nor his students are sure that a “cultural shift” will be enough to make a majority of companies prioritize fair behavior.

Additionally, students like Zhong think the impact of educating future industry members about algorithmic fairness is limited. Courses like CSCI 1951Z might help programmers “think about small things we wouldn’t have realized earlier,” he says, but low-level personal changes do not company priorities make.

“Self-regulation is vulnerable,” Venkatasubramanian concedes. “Corporate honchos have had plenty of time to do it. It hasn’t happened yet.”